July 18, 2007

Emergent Intentionality

Or, My Fancy Rationale for Indulging in Conspiracy Theories.

New Scientist just ran a story on The Lure of Conspiracy Theory. They claim that:

Conspiracy theories can have a valuable role in society. We need people to think “outside the box”, even if there is usually more sense to be found inside the box. The close scrutiny of evidence and the dogged pursuit of alternative explanations are key features of investigative journalism and critical scientific thinking. Conspiracy theorists can sometimes be the little guys who bring the big guys to account – including multinational companies and governments.

I strongly agree with this position, and consider the natural tension between dogged skepticism and flagrant bootstrapping to be a good methodology for fostering creative scientific thought.

But I think the NS story misses an important angle of conspiracy theories that I have been wondering about lately.

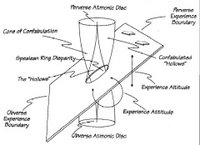

The question I have been wondering about is to what extent group behavior can be understood or characterized as conscious/willful/intentional. How much ideology do members of a group need to share before their behavior can be understood (and perhaps predicted) as that of an intentional agent? Is postulating intentionality a useful heuristic for understanding group behavior?

I am not going to follow this idea too far in this post, but this position provides an alternative perspective on theories like the idea that all Peace Corps volunteers are CIA agents, and on why theories like this become so popular. Our cognitive capacities are poorly equipped to perceive complex emergent behaviors, and postulating intentionality may serve as a natural (and useful) strategy for capturing these patterns.

I personally trace the philosophical genealogy of this idea to Daniel Dennett’s Intentional Stance, but a friend of mine pointed out that this idea can also be found in Madison’s Federalist Paper #10. The main idea behind Dennett’s intentional stance is that we can bracket the deep, hard, ontological questions about the nature of consciousness and simply observe how useful taking the intentional stance is as a heuristic for understanding other people’s behavior. We posit intentionality, which yields reliable predictions about agents (philosophical agents, not the ones working for letter agencies) in the world around us. And we don’t limit the intentional stance to other people either – we regularly adopt this stance with animals and machines, often to great utility.

For whatever it’s worth, labeling something a conspiracy theory sometimes seems like a pejorative, non-rational critique. Heck, Al-Qaeda is a conspiracy theory (and an open source project, according to Bruce Sterling’s SXSW ’07 Rant), but perversely, it’s The Power of Nightmares’ attempt to dispel this fabrication that is labeled the conspiracy.

But, I really want to live in a universe in which we actually landed on the moon.

Filed by jonah at 12:55 am under air,fire,metaphysics

2 Comments
